Extra: Transformers

This set of notebooks first introduces the key elements for building a transformer encoder-decoder model, namely multi-headed narrow attention, layer normalisation, and position encoding.

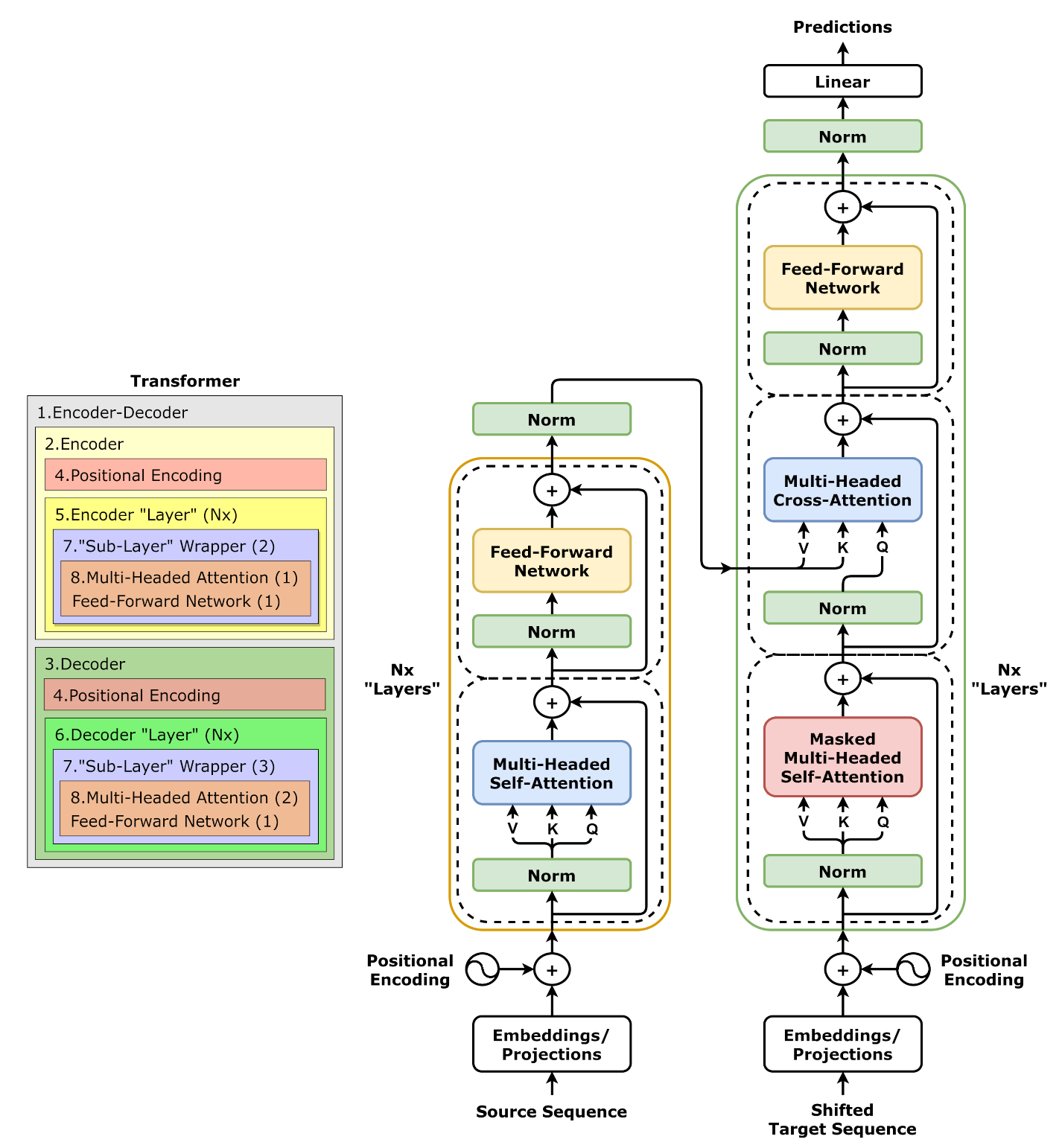

Finally, we assemble these elements into our own Transformer model and, in the transform and roll out notebook, compare it with the PyTorch nn.Transformer class. The accompanying picture provides a good mapping of the classes we implemented to the components used in a Transformer encoder-decoder model.
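As a rough point of reference for that comparison, the sketch below instantiates PyTorch's built-in class and runs one forward pass. The sizes (`d_model=16`, four heads, one layer on each side) and the random inputs are assumptions chosen only to keep the example small; they are not taken from the notebooks.

```python
import torch
import torch.nn as nn

torch.manual_seed(0)

# Illustrative sizes only (assumed, not the notebooks' settings)
d_model, n_heads = 16, 4

model = nn.Transformer(
    d_model=d_model,
    nhead=n_heads,
    num_encoder_layers=1,
    num_decoder_layers=1,
    dim_feedforward=32,
    dropout=0.0,
    batch_first=True,  # inputs are (batch, sequence, features)
)
model.eval()

src = torch.randn(2, 5, d_model)  # source sequence: batch of 2, length 5
tgt = torch.randn(2, 3, d_model)  # target sequence: batch of 2, length 3

# Causal mask so each target position attends only to itself and earlier ones
tgt_mask = model.generate_square_subsequent_mask(tgt.size(1))

with torch.no_grad():
    out = model(src, tgt, tgt_mask=tgt_mask)

print(out.shape)  # torch.Size([2, 3, 16])
```

The output has one `d_model`-sized vector per target position, which is the same shape a hand-built encoder-decoder should produce for the same inputs.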

Credit: the notebooks are adapted from:

Chapter 9 of Deep Learning with PyTorch Step-by-Step

Chapter 10 of Deep Learning with PyTorch Step-by-Step