4. Packed Sequences

Normally, a minibatch of variable-length sequences is represented numerically as rows in a matrix of integers, in which each sequence is left-aligned and zero-padded to accommodate the variable lengths.

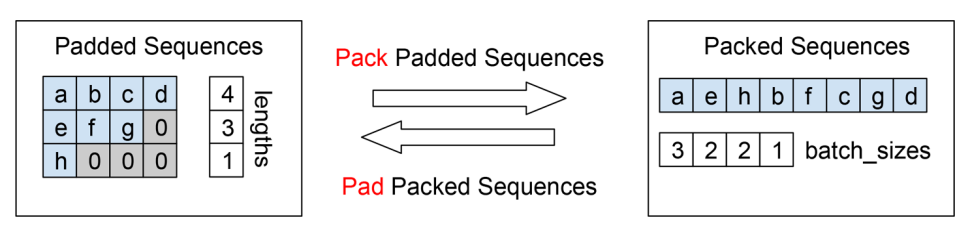

The PackedSequence data structure instead represents variable-length sequences as a single flat array: the data for all sequences at each time step is concatenated, one time step after another, and the number of sequences present at each time step is stored alongside it, as shown in the figure.

A matrix of padded sequences and the sequence lengths are shown on the left. The padded matrix is the standard way of representing variable-length sequences: each sequence is right-padded with zeroes and the results are stacked as row vectors.

In PyTorch, we can pack the padded sequences into a terser representation, the PackedSequence, shown on the right along with the batch sizes. This representation allows the GPU to step through the sequences by keeping track of how many sequences are present at each time step (the batch sizes). Using the example above:

The longest sequence has length 4, so there are 4 time steps.

At the first time step, we have three sequences (a, e, and h).

At the second time step, we have two sequences (b and f).

At the third time step, we have two sequences (c and g).

At the final time step, we have one sequence (d).
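The counting above can be sketched in plain Python. This is only an illustration of how the batch sizes follow from the sequence lengths; the helper name `batch_sizes_from_lengths` is hypothetical and is not part of PyTorch.

```python
def batch_sizes_from_lengths(lengths):
    """Count how many sequences are still active at each time step.

    Hypothetical helper for illustration only (not a PyTorch function).
    """
    max_len = max(lengths)
    # A sequence of length n is present at time steps 0 .. n-1.
    return [sum(1 for n in lengths if n > t) for t in range(max_len)]


# Lengths of the sequences abcd, efg, and h from the example.
lengths = [4, 3, 1]
batch_sizes = batch_sizes_from_lengths(lengths)
print(batch_sizes)
```

For the example lengths, this gives one count per time step: three sequences, then two, then two, then one.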

import torch

import torch.nn as nn

from torch.nn.utils.rnn import pack_padded_sequence, pad_packed_sequence

def describe(x):
    print("Type: {}".format(x.type()))
    print("Shape/size: {}".format(x.shape))
    print("Values: \n{}".format(x))

4.1. From padded to packed

abcd_padded = torch.tensor([1, 2, 3, 4], dtype=torch.float32)

efg_padded = torch.tensor([5, 6, 7, 0], dtype=torch.float32)

h_padded = torch.tensor([8, 0, 0, 0], dtype=torch.float32)

padded_tensor = torch.stack([abcd_padded, efg_padded, h_padded])

describe(padded_tensor)

Type: torch.FloatTensor

Shape/size: torch.Size([3, 4])

Values:

tensor([[1., 2., 3., 4.],
        [5., 6., 7., 0.],
        [8., 0., 0., 0.]])

lengths = [4, 3, 1]

packed_tensor = pack_padded_sequence(padded_tensor, lengths,
                                     batch_first=True)

packed_tensor

PackedSequence(data=tensor([1., 5., 8., 2., 6., 3., 7., 4.]), batch_sizes=tensor([3, 2, 2, 1]), sorted_indices=None, unsorted_indices=None)
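To see where the order of `data` comes from, here is a minimal pure-Python sketch of the packing step. It is not how PyTorch implements `pack_padded_sequence`; the function name `pack_rows` is hypothetical, and the sketch assumes the rows are already sorted by decreasing length, as they are in the example.

```python
def pack_rows(padded_rows, lengths):
    """Concatenate the non-padding values time step by time step.

    Hypothetical illustration only; assumes rows are sorted by
    decreasing length.
    """
    data, batch_sizes = [], []
    for t in range(max(lengths)):
        # Keep only the sequences that still have a value at step t.
        step = [row[t] for row, n in zip(padded_rows, lengths) if n > t]
        data.extend(step)
        batch_sizes.append(len(step))
    return data, batch_sizes


padded_rows = [
    [1.0, 2.0, 3.0, 4.0],  # abcd
    [5.0, 6.0, 7.0, 0.0],  # efg, padded with one zero
    [8.0, 0.0, 0.0, 0.0],  # h, padded with three zeroes
]
packed_data, packed_batch_sizes = pack_rows(padded_rows, [4, 3, 1])
print(packed_data)
print(packed_batch_sizes)
```

The padding zeroes never enter `packed_data`: the packed array holds 8 values instead of the 12 entries of the padded matrix.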

4.2. From packed to padded

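You can also turn a packed sequence back into a padded sequence with pad_packed_sequence, which returns the padded tensor together with the original lengths: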

unpacked_tensor, unpacked_lengths = pad_packed_sequence(packed_tensor, batch_first=True)

describe(unpacked_tensor)

describe(unpacked_lengths)

Type: torch.FloatTensor

Shape/size: torch.Size([3, 4])

Values:

tensor([[1., 2., 3., 4.],
        [5., 6., 7., 0.],
        [8., 0., 0., 0.]])

Type: torch.LongTensor

Shape/size: torch.Size([3])

Values:

tensor([4, 3, 1])
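The reverse direction can be sketched in plain Python as well. This only illustrates how the batch sizes are enough to rebuild the padded rows and the lengths; it is not the PyTorch implementation, and the name `unpack_rows` and its `padding_value` parameter are assumptions made for this example.

```python
def unpack_rows(data, batch_sizes, padding_value=0.0):
    """Rebuild padded rows and lengths from packed data.

    Hypothetical illustration only (not a PyTorch function).
    """
    num_seqs, max_len = batch_sizes[0], len(batch_sizes)
    padded = [[padding_value] * max_len for _ in range(num_seqs)]
    lengths = [0] * num_seqs
    offset = 0
    for t, size in enumerate(batch_sizes):
        # The next `size` values belong to the first `size` sequences.
        for i in range(size):
            padded[i][t] = data[offset + i]
            lengths[i] += 1
        offset += size
    return padded, lengths


data = [1.0, 5.0, 8.0, 2.0, 6.0, 3.0, 7.0, 4.0]
restored_rows, restored_lengths = unpack_rows(data, [3, 2, 2, 1])
print(restored_rows)
print(restored_lengths)
```

Each sequence's length is simply the number of time steps in which it appeared, so no separate length array needs to be stored in the packed form.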