1. Word Vectors from Word-Word Coocurrence Matrix¶

Let’s take a look at how to use SVD on Word Coocurrence Matrix to obtain word vectors.

# All Import Statements Defined Here

# ----------------

import sys

assert sys.version_info[0]==3

assert sys.version_info[1] >= 5

from gensim.models import KeyedVectors

from gensim.test.utils import datapath

import pprint

import matplotlib.pyplot as plt

plt.rcParams['figure.figsize'] = [10, 5]

import nltk

nltk.download('reuters')

from nltk.corpus import reuters

import numpy as np

import random

import scipy as sp

from sklearn.decomposition import TruncatedSVD

from sklearn.decomposition import PCA

START_TOKEN = '<START>'

END_TOKEN = '<END>'

np.random.seed(0)

random.seed(0)

# ----------------

C:\ProgramData\Anaconda3\envs\cits4012\lib\site-packages\gensim\similarities\__init__.py:15: UserWarning: The gensim.similarities.levenshtein submodule is disabled, because the optional Levenshtein package <https://pypi.org/project/python-Levenshtein/> is unavailable. Install Levenhstein (e.g. `pip install python-Levenshtein`) to suppress this warning.

warnings.warn(msg)

[nltk_data] Downloading package reuters to

[nltk_data] C:\Users\wei\AppData\Roaming\nltk_data...

[nltk_data] Package reuters is already up-to-date!

Most word vector models start from the following idea:

You shall know a word by the company it keeps (Firth, J. R. 1957:11)

Many word vector implementations are driven by the idea that similar words, i.e., (near) synonyms, will be used in similar contexts. As a result, similar words will often be spoken or written along with a shared subset of words, i.e., contexts. By examining these contexts, we can try to develop embeddings for our words. With this intuition in mind, many “old school” approaches to constructing word vectors relied on word counts. Here we elaborate upon one of those strategies, co-occurrence matrices (for more information, see here or here).

1.1. Co-Occurrence Matrix - Revisited¶

A co-occurrence matrix counts how often things co-occur in some environment. Given some word \(w_i\) occurring in the document, we consider the context window surrounding \(w_i\). Supposing our fixed window size is \(n\), then this is the \(n\) preceding and \(n\) subsequent words in that document, i.e. words \(w_{i-n} \dots w_{i-1}\) and \(w_{i+1} \dots w_{i+n}\). We build a co-occurrence matrix \(M\), which is a symmetric word-by-word matrix in which \(M_{ij}\) is the number of times \(w_j\) appears inside \(w_i\)’s window among all documents.

Example: Co-Occurrence with Fixed Window of n=1:

Document 1: “all that glitters is not gold”

Document 2: “all is well that ends well”

* |

|

all |

that |

glitters |

is |

not |

gold |

well |

ends |

|

|---|---|---|---|---|---|---|---|---|---|---|

|

0 |

2 |

0 |

0 |

0 |

0 |

0 |

0 |

0 |

0 |

all |

2 |

0 |

1 |

0 |

1 |

0 |

0 |

0 |

0 |

0 |

that |

0 |

1 |

0 |

1 |

0 |

0 |

0 |

1 |

1 |

0 |

glitters |

0 |

0 |

1 |

0 |

1 |

0 |

0 |

0 |

0 |

0 |

is |

0 |

1 |

0 |

1 |

0 |

1 |

0 |

1 |

0 |

0 |

not |

0 |

0 |

0 |

0 |

1 |

0 |

1 |

0 |

0 |

0 |

gold |

0 |

0 |

0 |

0 |

0 |

1 |

0 |

0 |

0 |

1 |

well |

0 |

0 |

1 |

0 |

1 |

0 |

0 |

0 |

1 |

1 |

ends |

0 |

0 |

1 |

0 |

0 |

0 |

0 |

1 |

0 |

0 |

|

0 |

0 |

0 |

0 |

0 |

0 |

1 |

1 |

0 |

0 |

Note: In NLP, we often add <START> and <END> tokens to represent the beginning and end of sentences, paragraphs or documents. In thise case we imagine <START> and <END> tokens encapsulating each document, e.g., “<START> All that glitters is not gold <END>”, and include these tokens in our co-occurrence counts.

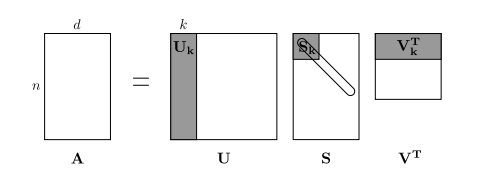

The rows (or columns) of this matrix provide one type of word vectors (those based on word-word co-occurrence), but the vectors will be large in general (linear in the number of distinct words in a corpus). Thus, our next step is to run dimensionality reduction. In particular, we will run SVD (Singular Value Decomposition), which is a kind of generalized PCA (Principal Components Analysis) to select the top \(k\) principal components. Here’s a visualization of dimensionality reduction with SVD. In this picture our co-occurrence matrix is \(A\) with \(n\) rows corresponding to \(n\) words. We obtain a full matrix decomposition, with the singular values ordered in the diagonal \(S\) matrix, and our new, shorter length-\(k\) word vectors in \(U_k\).

This reduced-dimensionality co-occurrence representation preserves semantic relationships between words, e.g. doctor and hospital will be closer than doctor and dog.

Note

If you can barely remember what an eigenvalue is, here’s a slow, friendly introduction to SVD. If you want to learn more thoroughly about PCA or SVD, feel free to check out lectures 7, 8, and 9 of CS168. These course notes provide a great high-level treatment of these general purpose algorithms. Though, for the purpose of this class, you only need to know how to extract the k-dimensional embeddings by utilizing pre-programmed implementations of these algorithms from the numpy, scipy, or sklearn python packages. In practice, it is challenging to apply full SVD to large corpora because of the memory needed to perform PCA or SVD. However, if you only want the top \(k\) vector components for relatively small \(k\) — known as Truncated SVD — then there are reasonably scalable techniques to compute those iteratively.

1.2. Plotting Co-Occurrence Word Embeddings¶

Here, we will be using the Reuters (business and financial news) corpus. If you haven’t run the import cell at the top of this page, please run it now (click it and press SHIFT-RETURN). The corpus consists of 10,788 news documents totaling 1.3 million words. These documents span 90 categories and are split into train and test. For more details, please see https://www.nltk.org/book/ch02.html. We provide a read_corpus function below that pulls out only articles from the “crude” (i.e. news articles about oil, gas, etc.) category. The function also adds <START> and <END> tokens to each of the documents, and lowercases words. You do not have to perform any other kind of pre-processing.

def read_corpus(category="crude"):

""" Read files from the specified Reuter's category.

Params:

category (string): category name

Return:

list of lists, with words from each of the processed files

"""

files = reuters.fileids(category)

return [[START_TOKEN] + [w.lower() for w in list(reuters.words(f))] + [END_TOKEN] for f in files]

Let’s have a look what these documents are like….

reuters_corpus = read_corpus()

pprint.pprint(reuters_corpus[:3], compact=True, width=100)

[['<START>', 'japan', 'to', 'revise', 'long', '-', 'term', 'energy', 'demand', 'downwards', 'the',

'ministry', 'of', 'international', 'trade', 'and', 'industry', '(', 'miti', ')', 'will', 'revise',

'its', 'long', '-', 'term', 'energy', 'supply', '/', 'demand', 'outlook', 'by', 'august', 'to',

'meet', 'a', 'forecast', 'downtrend', 'in', 'japanese', 'energy', 'demand', ',', 'ministry',

'officials', 'said', '.', 'miti', 'is', 'expected', 'to', 'lower', 'the', 'projection', 'for',

'primary', 'energy', 'supplies', 'in', 'the', 'year', '2000', 'to', '550', 'mln', 'kilolitres',

'(', 'kl', ')', 'from', '600', 'mln', ',', 'they', 'said', '.', 'the', 'decision', 'follows',

'the', 'emergence', 'of', 'structural', 'changes', 'in', 'japanese', 'industry', 'following',

'the', 'rise', 'in', 'the', 'value', 'of', 'the', 'yen', 'and', 'a', 'decline', 'in', 'domestic',

'electric', 'power', 'demand', '.', 'miti', 'is', 'planning', 'to', 'work', 'out', 'a', 'revised',

'energy', 'supply', '/', 'demand', 'outlook', 'through', 'deliberations', 'of', 'committee',

'meetings', 'of', 'the', 'agency', 'of', 'natural', 'resources', 'and', 'energy', ',', 'the',

'officials', 'said', '.', 'they', 'said', 'miti', 'will', 'also', 'review', 'the', 'breakdown',

'of', 'energy', 'supply', 'sources', ',', 'including', 'oil', ',', 'nuclear', ',', 'coal', 'and',

'natural', 'gas', '.', 'nuclear', 'energy', 'provided', 'the', 'bulk', 'of', 'japan', "'", 's',

'electric', 'power', 'in', 'the', 'fiscal', 'year', 'ended', 'march', '31', ',', 'supplying',

'an', 'estimated', '27', 'pct', 'on', 'a', 'kilowatt', '/', 'hour', 'basis', ',', 'followed',

'by', 'oil', '(', '23', 'pct', ')', 'and', 'liquefied', 'natural', 'gas', '(', '21', 'pct', '),',

'they', 'noted', '.', '<END>'],

['<START>', 'energy', '/', 'u', '.', 's', '.', 'petrochemical', 'industry', 'cheap', 'oil',

'feedstocks', ',', 'the', 'weakened', 'u', '.', 's', '.', 'dollar', 'and', 'a', 'plant',

'utilization', 'rate', 'approaching', '90', 'pct', 'will', 'propel', 'the', 'streamlined', 'u',

'.', 's', '.', 'petrochemical', 'industry', 'to', 'record', 'profits', 'this', 'year', ',',

'with', 'growth', 'expected', 'through', 'at', 'least', '1990', ',', 'major', 'company',

'executives', 'predicted', '.', 'this', 'bullish', 'outlook', 'for', 'chemical', 'manufacturing',

'and', 'an', 'industrywide', 'move', 'to', 'shed', 'unrelated', 'businesses', 'has', 'prompted',

'gaf', 'corp', '&', 'lt', ';', 'gaf', '>,', 'privately', '-', 'held', 'cain', 'chemical', 'inc',

',', 'and', 'other', 'firms', 'to', 'aggressively', 'seek', 'acquisitions', 'of', 'petrochemical',

'plants', '.', 'oil', 'companies', 'such', 'as', 'ashland', 'oil', 'inc', '&', 'lt', ';', 'ash',

'>,', 'the', 'kentucky', '-', 'based', 'oil', 'refiner', 'and', 'marketer', ',', 'are', 'also',

'shopping', 'for', 'money', '-', 'making', 'petrochemical', 'businesses', 'to', 'buy', '.', '"',

'i', 'see', 'us', 'poised', 'at', 'the', 'threshold', 'of', 'a', 'golden', 'period', ',"', 'said',

'paul', 'oreffice', ',', 'chairman', 'of', 'giant', 'dow', 'chemical', 'co', '&', 'lt', ';',

'dow', '>,', 'adding', ',', '"', 'there', "'", 's', 'no', 'major', 'plant', 'capacity', 'being',

'added', 'around', 'the', 'world', 'now', '.', 'the', 'whole', 'game', 'is', 'bringing', 'out',

'new', 'products', 'and', 'improving', 'the', 'old', 'ones', '."', 'analysts', 'say', 'the',

'chemical', 'industry', "'", 's', 'biggest', 'customers', ',', 'automobile', 'manufacturers',

'and', 'home', 'builders', 'that', 'use', 'a', 'lot', 'of', 'paints', 'and', 'plastics', ',',

'are', 'expected', 'to', 'buy', 'quantities', 'this', 'year', '.', 'u', '.', 's', '.',

'petrochemical', 'plants', 'are', 'currently', 'operating', 'at', 'about', '90', 'pct',

'capacity', ',', 'reflecting', 'tighter', 'supply', 'that', 'could', 'hike', 'product', 'prices',

'by', '30', 'to', '40', 'pct', 'this', 'year', ',', 'said', 'john', 'dosher', ',', 'managing',

'director', 'of', 'pace', 'consultants', 'inc', 'of', 'houston', '.', 'demand', 'for', 'some',

'products', 'such', 'as', 'styrene', 'could', 'push', 'profit', 'margins', 'up', 'by', 'as',

'much', 'as', '300', 'pct', ',', 'he', 'said', '.', 'oreffice', ',', 'speaking', 'at', 'a',

'meeting', 'of', 'chemical', 'engineers', 'in', 'houston', ',', 'said', 'dow', 'would', 'easily',

'top', 'the', '741', 'mln', 'dlrs', 'it', 'earned', 'last', 'year', 'and', 'predicted', 'it',

'would', 'have', 'the', 'best', 'year', 'in', 'its', 'history', '.', 'in', '1985', ',', 'when',

'oil', 'prices', 'were', 'still', 'above', '25', 'dlrs', 'a', 'barrel', 'and', 'chemical',

'exports', 'were', 'adversely', 'affected', 'by', 'the', 'strong', 'u', '.', 's', '.', 'dollar',

',', 'dow', 'had', 'profits', 'of', '58', 'mln', 'dlrs', '.', '"', 'i', 'believe', 'the',

'entire', 'chemical', 'industry', 'is', 'headed', 'for', 'a', 'record', 'year', 'or', 'close',

'to', 'it', ',"', 'oreffice', 'said', '.', 'gaf', 'chairman', 'samuel', 'heyman', 'estimated',

'that', 'the', 'u', '.', 's', '.', 'chemical', 'industry', 'would', 'report', 'a', '20', 'pct',

'gain', 'in', 'profits', 'during', '1987', '.', 'last', 'year', ',', 'the', 'domestic',

'industry', 'earned', 'a', 'total', 'of', '13', 'billion', 'dlrs', ',', 'a', '54', 'pct', 'leap',

'from', '1985', '.', 'the', 'turn', 'in', 'the', 'fortunes', 'of', 'the', 'once', '-', 'sickly',

'chemical', 'industry', 'has', 'been', 'brought', 'about', 'by', 'a', 'combination', 'of', 'luck',

'and', 'planning', ',', 'said', 'pace', "'", 's', 'john', 'dosher', '.', 'dosher', 'said', 'last',

'year', "'", 's', 'fall', 'in', 'oil', 'prices', 'made', 'feedstocks', 'dramatically', 'cheaper',

'and', 'at', 'the', 'same', 'time', 'the', 'american', 'dollar', 'was', 'weakening', 'against',

'foreign', 'currencies', '.', 'that', 'helped', 'boost', 'u', '.', 's', '.', 'chemical',

'exports', '.', 'also', 'helping', 'to', 'bring', 'supply', 'and', 'demand', 'into', 'balance',

'has', 'been', 'the', 'gradual', 'market', 'absorption', 'of', 'the', 'extra', 'chemical',

'manufacturing', 'capacity', 'created', 'by', 'middle', 'eastern', 'oil', 'producers', 'in',

'the', 'early', '1980s', '.', 'finally', ',', 'virtually', 'all', 'major', 'u', '.', 's', '.',

'chemical', 'manufacturers', 'have', 'embarked', 'on', 'an', 'extensive', 'corporate',

'restructuring', 'program', 'to', 'mothball', 'inefficient', 'plants', ',', 'trim', 'the',

'payroll', 'and', 'eliminate', 'unrelated', 'businesses', '.', 'the', 'restructuring', 'touched',

'off', 'a', 'flurry', 'of', 'friendly', 'and', 'hostile', 'takeover', 'attempts', '.', 'gaf', ',',

'which', 'made', 'an', 'unsuccessful', 'attempt', 'in', '1985', 'to', 'acquire', 'union',

'carbide', 'corp', '&', 'lt', ';', 'uk', '>,', 'recently', 'offered', 'three', 'billion', 'dlrs',

'for', 'borg', 'warner', 'corp', '&', 'lt', ';', 'bor', '>,', 'a', 'chicago', 'manufacturer',

'of', 'plastics', 'and', 'chemicals', '.', 'another', 'industry', 'powerhouse', ',', 'w', '.',

'r', '.', 'grace', '&', 'lt', ';', 'gra', '>', 'has', 'divested', 'its', 'retailing', ',',

'restaurant', 'and', 'fertilizer', 'businesses', 'to', 'raise', 'cash', 'for', 'chemical',

'acquisitions', '.', 'but', 'some', 'experts', 'worry', 'that', 'the', 'chemical', 'industry',

'may', 'be', 'headed', 'for', 'trouble', 'if', 'companies', 'continue', 'turning', 'their',

'back', 'on', 'the', 'manufacturing', 'of', 'staple', 'petrochemical', 'commodities', ',', 'such',

'as', 'ethylene', ',', 'in', 'favor', 'of', 'more', 'profitable', 'specialty', 'chemicals',

'that', 'are', 'custom', '-', 'designed', 'for', 'a', 'small', 'group', 'of', 'buyers', '.', '"',

'companies', 'like', 'dupont', '&', 'lt', ';', 'dd', '>', 'and', 'monsanto', 'co', '&', 'lt', ';',

'mtc', '>', 'spent', 'the', 'past', 'two', 'or', 'three', 'years', 'trying', 'to', 'get', 'out',

'of', 'the', 'commodity', 'chemical', 'business', 'in', 'reaction', 'to', 'how', 'badly', 'the',

'market', 'had', 'deteriorated', ',"', 'dosher', 'said', '.', '"', 'but', 'i', 'think', 'they',

'will', 'eventually', 'kill', 'the', 'margins', 'on', 'the', 'profitable', 'chemicals', 'in',

'the', 'niche', 'market', '."', 'some', 'top', 'chemical', 'executives', 'share', 'the',

'concern', '.', '"', 'the', 'challenge', 'for', 'our', 'industry', 'is', 'to', 'keep', 'from',

'getting', 'carried', 'away', 'and', 'repeating', 'past', 'mistakes', ',"', 'gaf', "'", 's',

'heyman', 'cautioned', '.', '"', 'the', 'shift', 'from', 'commodity', 'chemicals', 'may', 'be',

'ill', '-', 'advised', '.', 'specialty', 'businesses', 'do', 'not', 'stay', 'special', 'long',

'."', 'houston', '-', 'based', 'cain', 'chemical', ',', 'created', 'this', 'month', 'by', 'the',

'sterling', 'investment', 'banking', 'group', ',', 'believes', 'it', 'can', 'generate', '700',

'mln', 'dlrs', 'in', 'annual', 'sales', 'by', 'bucking', 'the', 'industry', 'trend', '.',

'chairman', 'gordon', 'cain', ',', 'who', 'previously', 'led', 'a', 'leveraged', 'buyout', 'of',

'dupont', "'", 's', 'conoco', 'inc', "'", 's', 'chemical', 'business', ',', 'has', 'spent', '1',

'.', '1', 'billion', 'dlrs', 'since', 'january', 'to', 'buy', 'seven', 'petrochemical', 'plants',

'along', 'the', 'texas', 'gulf', 'coast', '.', 'the', 'plants', 'produce', 'only', 'basic',

'commodity', 'petrochemicals', 'that', 'are', 'the', 'building', 'blocks', 'of', 'specialty',

'products', '.', '"', 'this', 'kind', 'of', 'commodity', 'chemical', 'business', 'will', 'never',

'be', 'a', 'glamorous', ',', 'high', '-', 'margin', 'business', ',"', 'cain', 'said', ',',

'adding', 'that', 'demand', 'is', 'expected', 'to', 'grow', 'by', 'about', 'three', 'pct',

'annually', '.', 'garo', 'armen', ',', 'an', 'analyst', 'with', 'dean', 'witter', 'reynolds', ',',

'said', 'chemical', 'makers', 'have', 'also', 'benefitted', 'by', 'increasing', 'demand', 'for',

'plastics', 'as', 'prices', 'become', 'more', 'competitive', 'with', 'aluminum', ',', 'wood',

'and', 'steel', 'products', '.', 'armen', 'estimated', 'the', 'upturn', 'in', 'the', 'chemical',

'business', 'could', 'last', 'as', 'long', 'as', 'four', 'or', 'five', 'years', ',', 'provided',

'the', 'u', '.', 's', '.', 'economy', 'continues', 'its', 'modest', 'rate', 'of', 'growth', '.',

'<END>'],

['<START>', 'turkey', 'calls', 'for', 'dialogue', 'to', 'solve', 'dispute', 'turkey', 'said',

'today', 'its', 'disputes', 'with', 'greece', ',', 'including', 'rights', 'on', 'the',

'continental', 'shelf', 'in', 'the', 'aegean', 'sea', ',', 'should', 'be', 'solved', 'through',

'negotiations', '.', 'a', 'foreign', 'ministry', 'statement', 'said', 'the', 'latest', 'crisis',

'between', 'the', 'two', 'nato', 'members', 'stemmed', 'from', 'the', 'continental', 'shelf',

'dispute', 'and', 'an', 'agreement', 'on', 'this', 'issue', 'would', 'effect', 'the', 'security',

',', 'economy', 'and', 'other', 'rights', 'of', 'both', 'countries', '.', '"', 'as', 'the',

'issue', 'is', 'basicly', 'political', ',', 'a', 'solution', 'can', 'only', 'be', 'found', 'by',

'bilateral', 'negotiations', ',"', 'the', 'statement', 'said', '.', 'greece', 'has', 'repeatedly',

'said', 'the', 'issue', 'was', 'legal', 'and', 'could', 'be', 'solved', 'at', 'the',

'international', 'court', 'of', 'justice', '.', 'the', 'two', 'countries', 'approached', 'armed',

'confrontation', 'last', 'month', 'after', 'greece', 'announced', 'it', 'planned', 'oil',

'exploration', 'work', 'in', 'the', 'aegean', 'and', 'turkey', 'said', 'it', 'would', 'also',

'search', 'for', 'oil', '.', 'a', 'face', '-', 'off', 'was', 'averted', 'when', 'turkey',

'confined', 'its', 'research', 'to', 'territorrial', 'waters', '.', '"', 'the', 'latest',

'crises', 'created', 'an', 'historic', 'opportunity', 'to', 'solve', 'the', 'disputes', 'between',

'the', 'two', 'countries', ',"', 'the', 'foreign', 'ministry', 'statement', 'said', '.', 'turkey',

"'", 's', 'ambassador', 'in', 'athens', ',', 'nazmi', 'akiman', ',', 'was', 'due', 'to', 'meet',

'prime', 'minister', 'andreas', 'papandreou', 'today', 'for', 'the', 'greek', 'reply', 'to', 'a',

'message', 'sent', 'last', 'week', 'by', 'turkish', 'prime', 'minister', 'turgut', 'ozal', '.',

'the', 'contents', 'of', 'the', 'message', 'were', 'not', 'disclosed', '.', '<END>']]

1.3. Find distinct_words¶

Let’s write a method to work out the distinct words (word types) that occur in the corpus. You can do this with for loops, but it’s more efficient to do it with Python list comprehensions. In particular, this may be useful to flatten a list of lists. If you’re not familiar with Python list comprehensions in general, here’s more information.

You may find it useful to use Python sets to remove duplicate words.

def distinct_words(corpus):

""" Determine a list of distinct words for the corpus.

Params:

corpus (list of list of strings): corpus of documents

Return:

corpus_words (list of strings): list of distinct words across the corpus, sorted (using python 'sorted' function)

num_corpus_words (integer): number of distinct words across the corpus

"""

corpus_words = []

num_corpus_words = -1

# ------------------

# Write your implementation here.

corpus_words = sorted(list(set([y for x in corpus for y in x])))

# corpus_words = [y for x in corpus for y in x]

# corpus_words = list(set(corpus_words)) # unique words

# corpus_words = sorted(corpus_words) # sorts

num_corpus_words = len(corpus_words)

# ------------------

return corpus_words, num_corpus_words

{Test-driven coding practice}

A good practice is to write test code to validate your program output against expected output.

# ---------------------

# Run this sanity check

# Note that this not an exhaustive check for correctness.

# Very simple tokenization using the Python string split

# with space - string.split(" ").

# ---------------------

# Define toy corpus

test_corpus = ["{} All that glitters isn't gold {}".format(START_TOKEN, END_TOKEN).split(" "), "{} All's well that ends well {}".format(START_TOKEN, END_TOKEN).split(" ")]

test_corpus_words, num_corpus_words = distinct_words(test_corpus)

# Correct answers

ans_test_corpus_words = sorted([START_TOKEN, "All", "ends", "that", "gold", "All's", "glitters", "isn't", "well", END_TOKEN])

ans_num_corpus_words = len(ans_test_corpus_words)

# Test correct number of words

assert(num_corpus_words == ans_num_corpus_words), "Incorrect number of distinct words. Correct: {}. Yours: {}".format(ans_num_corpus_words, num_corpus_words)

# Test correct words

assert (test_corpus_words == ans_test_corpus_words), "Incorrect corpus_words.\nCorrect: {}\nYours: {}".format(str(ans_test_corpus_words), str(test_corpus_words))

# Print Success

print ("-" * 80)

print("Passed All Tests!")

print ("-" * 80)

--------------------------------------------------------------------------------

Passed All Tests!

--------------------------------------------------------------------------------

1.4. compute_co_occurrence_matrix¶

Write a method that constructs a co-occurrence matrix for a certain window-size \(n\) (with a default of 4), considering words \(n\) before and \(n\) after the word in the center of the window. Here, we start to use numpy (np) to represent vectors, matrices, and tensors. If you’re not familiar with NumPy, there’s a NumPy tutorial Python NumPy tutorial.

def compute_co_occurrence_matrix(corpus, window_size=4):

""" Compute co-occurrence matrix for the given corpus and window_size (default of 4).

Note: Each word in a document should be at the center of a window. Words near edges will have a smaller

number of co-occurring words.

For example, if we take the document "<START> All that glitters is not gold <END>" with window size of 4,

"All" will co-occur with "<START>", "that", "glitters", "is", and "not".

Params:

corpus (list of list of strings): corpus of documents

window_size (int): size of context window

Return:

M (a symmetric numpy matrix of shape (number of unique words in the corpus , number of unique words in the corpus)):

Co-occurence matrix of word counts.

The ordering of the words in the rows/columns should be the same as the ordering of the words given by the distinct_words function.

word2Ind (dict): dictionary that maps word to index (i.e. row/column number) for matrix M.

"""

words, num_words = distinct_words(corpus)

M = None

word2Ind = {}

# ------------------

M = np.zeros((num_words, num_words))

word2Ind = {w : i for i, w in enumerate(words)}

for sentence in corpus:

for cen_w_i, cen_w in enumerate(sentence): # center word

for con_w_i in range(cen_w_i-window_size, cen_w_i+window_size+1): # context word

if(cen_w_i!=con_w_i and con_w_i>=0 and con_w_i<len(sentence)):

ce = word2Ind[cen_w]

co = word2Ind[sentence[con_w_i]]

M[ce][co] = M[ce][co] + 1

# ------------------

return M, word2Ind

# ---------------------

# Run this sanity check

# Note that this is not an exhaustive check for correctness.

# ---------------------

# Define toy corpus and get student's co-occurrence matrix

test_corpus = ["{} All that glitters isn't gold {}".format(START_TOKEN, END_TOKEN).split(" "), "{} All's well that ends well {}".format(START_TOKEN, END_TOKEN).split(" ")]

M_test, word2Ind_test = compute_co_occurrence_matrix(test_corpus, window_size=1)

# Correct M and word2Ind

M_test_ans = np.array(

[[0., 0., 0., 0., 0., 0., 1., 0., 0., 1.,],

[0., 0., 1., 1., 0., 0., 0., 0., 0., 0.,],

[0., 1., 0., 0., 0., 0., 0., 0., 1., 0.,],

[0., 1., 0., 0., 0., 0., 0., 0., 0., 1.,],

[0., 0., 0., 0., 0., 0., 0., 0., 1., 1.,],

[0., 0., 0., 0., 0., 0., 0., 1., 1., 0.,],

[1., 0., 0., 0., 0., 0., 0., 1., 0., 0.,],

[0., 0., 0., 0., 0., 1., 1., 0., 0., 0.,],

[0., 0., 1., 0., 1., 1., 0., 0., 0., 1.,],

[1., 0., 0., 1., 1., 0., 0., 0., 1., 0.,]]

)

ans_test_corpus_words = sorted([START_TOKEN, "All", "ends", "that", "gold", "All's", "glitters", "isn't", "well", END_TOKEN])

word2Ind_ans = dict(zip(ans_test_corpus_words, range(len(ans_test_corpus_words))))

# Test correct word2Ind

assert (word2Ind_ans == word2Ind_test), "Your word2Ind is incorrect:\nCorrect: {}\nYours: {}".format(word2Ind_ans, word2Ind_test)

# Test correct M shape

assert (M_test.shape == M_test_ans.shape), "M matrix has incorrect shape.\nCorrect: {}\nYours: {}".format(M_test.shape, M_test_ans.shape)

# Test correct M values

for w1 in word2Ind_ans.keys():

idx1 = word2Ind_ans[w1]

for w2 in word2Ind_ans.keys():

idx2 = word2Ind_ans[w2]

student = M_test[idx1, idx2]

correct = M_test_ans[idx1, idx2]

if student != correct:

print("Correct M:")

print(M_test_ans)

print("Your M: ")

print(M_test)

raise AssertionError("Incorrect count at index ({}, {})=({}, {}) in matrix M. Yours has {} but should have {}.".format(idx1, idx2, w1, w2, student, correct))

# Print Success

print ("-" * 80)

print("Passed All Tests!")

print ("-" * 80)

--------------------------------------------------------------------------------

Passed All Tests!

--------------------------------------------------------------------------------

1.5. Using SVD to reduce_to_k_dim¶

Now let’s construct a method that performs dimensionality reduction on the matrix to produce k-dimensional embeddings. Use SVD to take the top k components and produce a new matrix of k-dimensional embeddings.

Note

All of numpy, scipy, and scikit-learn (sklearn) provide some implementation of SVD, but only scipy and sklearn provide an implementation of Truncated SVD, and only sklearn provides an efficient randomized algorithm for calculating large-scale Truncated SVD. So please use sklearn.decomposition.TruncatedSVD.

import sklearn

def reduce_to_k_dim(M, k=2):

""" Reduce a co-occurence count matrix of dimensionality (num_corpus_words, num_corpus_words)

to a matrix of dimensionality (num_corpus_words, k) using the following SVD function from Scikit-Learn:

- http://scikit-learn.org/stable/modules/generated/sklearn.decomposition.TruncatedSVD.html

Params:

M (numpy matrix of shape (number of unique words in the corpus , number of unique words in the corpus)): co-occurence matrix of word counts

k (int): embedding size of each word after dimension reduction

Return:

M_reduced (numpy matrix of shape (number of corpus words, k)): matrix of k-dimensioal word embeddings.

In terms of the SVD from math class, this actually returns U * S

"""

n_iters = 10 # Use this parameter in your call to `TruncatedSVD`

M_reduced = None

print("Running Truncated SVD over %i words..." % (M.shape[0]))

# ------------------

# Write your implementation here.

M_r = sklearn.decomposition.TruncatedSVD(n_components=k,

algorithm='randomized',

n_iter=n_iters,

random_state=None,

tol=0.0)

M_reduced = M_r.fit_transform(M)

# ------------------

print("Done.")

return M_reduced

# ---------------------

# Run this sanity check

# Note that this is not an exhaustive check for correctness

# In fact we only check that your M_reduced has the right dimensions.

# ---------------------

# Define toy corpus and run student code

test_corpus = ["{} All that glitters isn't gold {}".format(START_TOKEN, END_TOKEN).split(" "), "{} All's well that ends well {}".format(START_TOKEN, END_TOKEN).split(" ")]

M_test, word2Ind_test = compute_co_occurrence_matrix(test_corpus, window_size=1)

M_test_reduced = reduce_to_k_dim(M_test, k=2)

# Test proper dimensions

assert (M_test_reduced.shape[0] == 10), "M_reduced has {} rows; should have {}".format(M_test_reduced.shape[0], 10)

assert (M_test_reduced.shape[1] == 2), "M_reduced has {} columns; should have {}".format(M_test_reduced.shape[1], 2)

# Print Success

print ("-" * 80)

print("Passed All Tests!")

print ("-" * 80)

Running Truncated SVD over 10 words...

Done.

--------------------------------------------------------------------------------

Passed All Tests!

--------------------------------------------------------------------------------

1.6. Visualise the embeddings using plot_embeddings¶

We can now write a function to plot a set of 2D vectors in 2D space. For graphs, we will use Matplotlib (plt).

For this example, you may find it useful to adapt this code. In the future, a good way to make a plot is to look at the Matplotlib gallery, find a plot that looks somewhat like what you want, and adapt the code they give.

def plot_embeddings(M_reduced, word2Ind, words):

""" Plot in a scatterplot the embeddings of the words specified in the list "words".

NOTE: do not plot all the words listed in M_reduced / word2Ind.

Include a label next to each point.

Params:

M_reduced (numpy matrix of shape (number of unique words in the corpus , 2)): matrix of 2-dimensioal word embeddings

word2Ind (dict): dictionary that maps word to indices for matrix M

words (list of strings): words whose embeddings we want to visualize

"""

# ------------------

import matplotlib.pyplot as plt

x_coords = M_reduced[:,0]

y_coords = M_reduced[:,1]

for word in words:

i = word2Ind[word]

x = x_coords[i]

y = y_coords[i]

plt.scatter(x, y, color='r', marker='x')

plt.annotate(word, (x, y))

plt.show()

# ------------------

# ---------------------

# Run this sanity check

# Note that this is not an exhaustive check for correctness.

# The plot produced should look like the "test solution plot" depicted below.

# ---------------------

print ("-" * 80)

print ("Outputted Plot:")

M_reduced_plot_test = np.array([[1, 1], [-1, -1], [1, -1], [-1, 1], [0, 0]])

word2Ind_plot_test = {'test1': 0, 'test2': 1, 'test3': 2, 'test4': 3, 'test5': 4}

words = ['test1', 'test2', 'test3', 'test4', 'test5']

plot_embeddings(M_reduced_plot_test, word2Ind_plot_test, words)

print ("-" * 80)

--------------------------------------------------------------------------------

Outputted Plot:

--------------------------------------------------------------------------------